Is construction growing or has it gone temporarily into reverse?

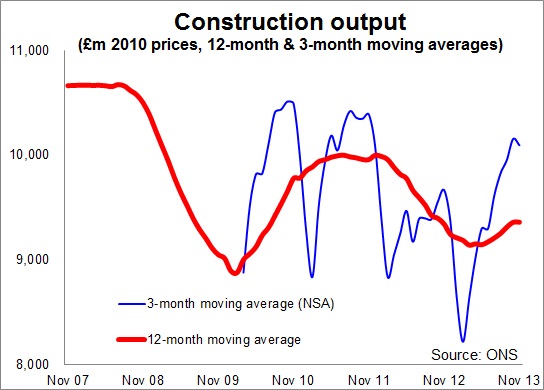

The Office for National Statistics came up with a figure showing that construction output dropped 4% from October to November. It also showed that output in November 2013 was 2.2% higher than in November 2012.

This may deflate some and confuse others because other data seem to show construction activity rocketing – just look at the data from Markit/CIPS or RICS.

There is also a widespread mood of optimism afoot. The latest ONS data just didn’t fit with the increasingly accepted narrative that construction is booming.

So are ONS data wrong? Or are other data wrong?

Before seeking to answer that directly, let’s consider whether the latest construction output data were really a big surprise.

Short answer: No, at least not for anyone who has followed them for any length of time.

The industry is a lumpy thing. The data are volatile. So, a growth path of two steps forward and one back is nothing freaky.

Furthermore, however much care you put into the seasonal adjustment, weighting and deflating of the raw data, you will end up with errors and uncertainties. That’s the nature of statistics, and construction is at the rawer end of the game.

The truth is that the industry is a muddy mix of businesses and business activities and can be impacted quite a bit by bad weather.

Measuring construction is a nightmare, and that’s assuming you can come up with a decent definition and a reasonable approach to collecting some half-decent numbers. Even the ONS data, which are the most meticulously collected, are subject to some very awkward lags. For example, firms might base their returns on invoices, or on receipt of payment.

But what can you do?

So even if you apply consistent rules for collection and processing, with construction data there’s a fair chance you’ll find you get results from time to time that seem a bit odd.

So, no, the latest ONS data were not that puzzling, even to me, who (perhaps foolishly) suggested earlier today that the ONS data might show more growth. Well, I was wrong. It didn’t happen.

Am I worried? No. These are just one month’s data and subject to revisions – very possibly upward. No one with half a brain and a working knowledge of the industry data would take too much notice of a single month’s figures in construction. And frankly on a moving 12-month basis (my preferred ready-reckoner measure of growth) the industry is still chugging upward – just.
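To make the contrast concrete, here is a minimal sketch of how a single bad month can sit alongside positive growth on a moving 12-month basis. It assumes (my reading, not a formal definition) that the moving 12-month measure compares the total of the latest 12 months with the total of the 12 months before; the function names and the monthly series are hypothetical, not ONS figures.

```python
def month_on_month(monthly_output):
    """Percentage change from the previous month to the latest month."""
    return 100.0 * (monthly_output[-1] / monthly_output[-2] - 1.0)


def moving_12m_growth(monthly_output):
    """Percentage change in the latest 12-month total versus the prior 12-month total."""
    if len(monthly_output) < 24:
        raise ValueError("need at least 24 months of output data")
    latest = sum(monthly_output[-12:])
    previous = sum(monthly_output[-24:-12])
    return 100.0 * (latest - previous) / previous


# Hypothetical index values: a flat year at 100, then a year at 103 ending with a dip to 99.
series = [100.0] * 12 + [103.0] * 11 + [99.0]

print(f"month-on-month: {month_on_month(series):.1f}%")      # -3.9%
print(f"moving 12-month: {moving_12m_growth(series):.1f}%")  # 2.7%
```

The same dip that looks alarming month on month barely dents the rolling comparison, which is why one month's figure is a poor guide on its own.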

But are they wrong? Well, almost certainly, yes. All data in construction (well, probably all data in general) tend to be wrong, and sometimes very wrong. The question is how wrong they are.

There are problems, of course there are. But as a basis for measuring the industry they are the best we have. What’s more the ONS listens to problems and, within its ability to find the resources, does seek to improve the data.

It must be remembered too that no other survey seeks to measure the actual size of construction. They tend to measure change. That’s a much easier task. Not that measuring change is as straightforward as it may seem or some like to suggest.

So, are these other surveys right or wrong?

Here I fear I may sound a bit wishy-washy. The problem is, in my view, most often the interpretation rather than the data.

Let’s consider the commentary provided in December by the data provider Markit when it released its Construction PMI – its stab at construction activity for November.

The assessment was: “November data pointed to another strong upturn in the UK construction sector, with output and employment both rising at the sharpest rate since August 2007.”

Hell’s teeth, that sounds impressive. And you can see why plenty of people were bamboozled by today’s release of the ONS November figure showing a fall. It could certainly lead people to wonder how, in the same month, the ONS found construction seemingly heading into reverse.

Personally, I’m not sure that the Markit survey can give you a clear view of the precise growth rate on a month-by-month basis. There are a number of reasons for this.

It surveys purchasing managers. What’s the proportion of construction firms that have such people?

It has a sample of fewer than 200 respondents, drawn from an industry of great diversity. So how representative can it be? The company blurb says: “PMIs are based on monthly surveys of carefully selected companies.” I shudder at “carefully selected” and wonder about selection bias. Oddly, I’d be happier with “randomly selected”.

But with such a small sample, how does it scale up to cover the whole industry, with its patchwork of regional and sector coverage? How does it deal with survivor bias? How does it deal with weighting? How effective is any seasonal adjustment? How do respondents adjust for special factors? How does the survey deal with non-responding firms? And I could ask a host more questions.

Frankly, none of the above matters that much if the survey is just seen as a quick snapshot business indicator. As a snapshot indicator it is useful.

But all the above does matter if it is being compared directly against the ONS construction output data.

It is important to note that, to my knowledge, it doesn’t measure actual volume. In crude terms it measures the firms that are seeing more activity against those that are seeing less. You can do this in a number of fancy ways, with neat weightings and everything.
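For readers unfamiliar with how this works, here is a minimal sketch of the simplest diffusion index. It uses the formula commonly quoted for PMIs (the share of respondents reporting higher activity plus half the share reporting no change, so a reading of 50 means as many firms report a rise as a fall). It is an illustration under that assumption, without Markit's weighting or seasonal adjustment, and the response counts are hypothetical.

```python
from collections import Counter


def diffusion_index(responses):
    """Share reporting 'higher' plus half the share reporting 'same', scaled to 0-100.

    Each response is one of 'higher', 'same' or 'lower'. A reading above 50 means
    more respondents saw a rise than a fall; it says nothing about how big the rise was.
    """
    if not responses:
        raise ValueError("no responses")
    counts = Counter(responses)  # missing categories count as zero
    return 100.0 * (counts["higher"] + 0.5 * counts["same"]) / len(responses)


# Hypothetical survey: 60 firms report higher activity, 30 unchanged, 10 lower.
sample = ["higher"] * 60 + ["same"] * 30 + ["lower"] * 10
print(diffusion_index(sample))  # 75.0
```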

But whatever adjustments are made, you are likely to get high scores when lots of firms are seeing growth, even if that growth is fairly modest. So a shallow, broad-based recovery might score higher than a steeper but narrower one. I’m not sure how, or if, Markit can adjust for this.
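The point about breadth versus depth can be illustrated with a toy calculation. This sketch assumes ten equally sized firms (so a simple average of their changes stands in for the industry's growth rate) and scores each firm's change with the same higher/same/lower formula commonly used for PMIs; the firm-level figures are invented purely for illustration.

```python
def diffusion_index(changes):
    """Percentage of firms with rising output plus half the percentage with no change."""
    higher = sum(1 for c in changes if c > 0)
    same = sum(1 for c in changes if c == 0)
    return 100.0 * (higher + 0.5 * same) / len(changes)


def average_growth(changes):
    """Industry growth rate, assuming every firm is the same size."""
    return sum(changes) / len(changes)


# Hypothetical % changes in output for ten equally sized firms.
broad_shallow = [1.0] * 8 + [-1.0] * 2   # most firms edge up
narrow_steep = [10.0] * 3 + [-1.0] * 7   # a few firms surge, the rest slip

for name, changes in [("broad, shallow", broad_shallow), ("narrow, steep", narrow_steep)]:
    print(f"{name}: index {diffusion_index(changes):.0f}, growth {average_growth(changes):.1f}%")
# broad, shallow: index 80, growth 0.6%
# narrow, steep: index 30, growth 2.3%
```

The broad but shallow recovery scores far higher on the index even though the narrow one delivers nearly four times the growth.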

It is a point I have made before about the RICS survey.

There is nothing wrong with this, so long as it is recognised in the interpretation of the data. And I know that the economists at RICS are very aware of it.

For the record, I’m a long-term fan of the RICS quarterly construction survey, warts and all. It has the very useful quality (or fault, if you want it to compare directly with the ONS data) of being biased towards the early stages of construction. So it should rise or fall ahead of construction output.
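One simple way to check whether a survey leads output is to correlate the survey in one quarter with output some quarters later and see which lag fits best. The sketch below does that with a plain Pearson correlation. The series are synthetic, built so that output simply repeats the survey two quarters later, and the function names are hypothetical; real data would need detrending and a lot more care before drawing any conclusion.

```python
def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def best_lead(indicator, output, max_lag):
    """Return (lag, r) for the lag at which indicator[t] best matches output[t + lag]."""
    if len(indicator) != len(output):
        raise ValueError("series must be the same length")
    best = None
    for lag in range(max_lag + 1):
        # Pair each survey reading with the output reading `lag` quarters later.
        r = pearson(indicator[: len(indicator) - lag], output[lag:])
        if best is None or r > best[1]:
            best = (lag, r)
    return best


# Synthetic quarterly series: output echoes the survey balance two quarters later.
survey = [0, 1, 3, 2, 5, 4, 6, 8, 7, 9, 8, 10]
output = [0, 0] + survey[:-2]

lag, r = best_lead(survey, output, max_lag=4)
print(lag, round(r, 2))  # 2 1.0
```

With real survey and ONS series the peak would be far less clean.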

To wrap up, the real lesson to be learned, in my view, from the (perhaps frustrating) difference between construction indicators is not which is wrong or which is right, but the usefulness of each of the indicators and how they can be used together to gain a better insight into the bizarre industry most of us have grown to love.

The fact that the surveys from time to time appear to be telling different stories is not a bad thing.